If you find this research helpful, please cite our paper (volume, number, and pages coming soon):

@ARTICLE{VIUNet,

author={Kao, Peng-Yuan and Chang, Hsui-Jui and Tseng, Kuan-Wei and Chen, Timothy and Luo, He-Lin and Hung, Yi-Ping},

journal={IEEE Access},

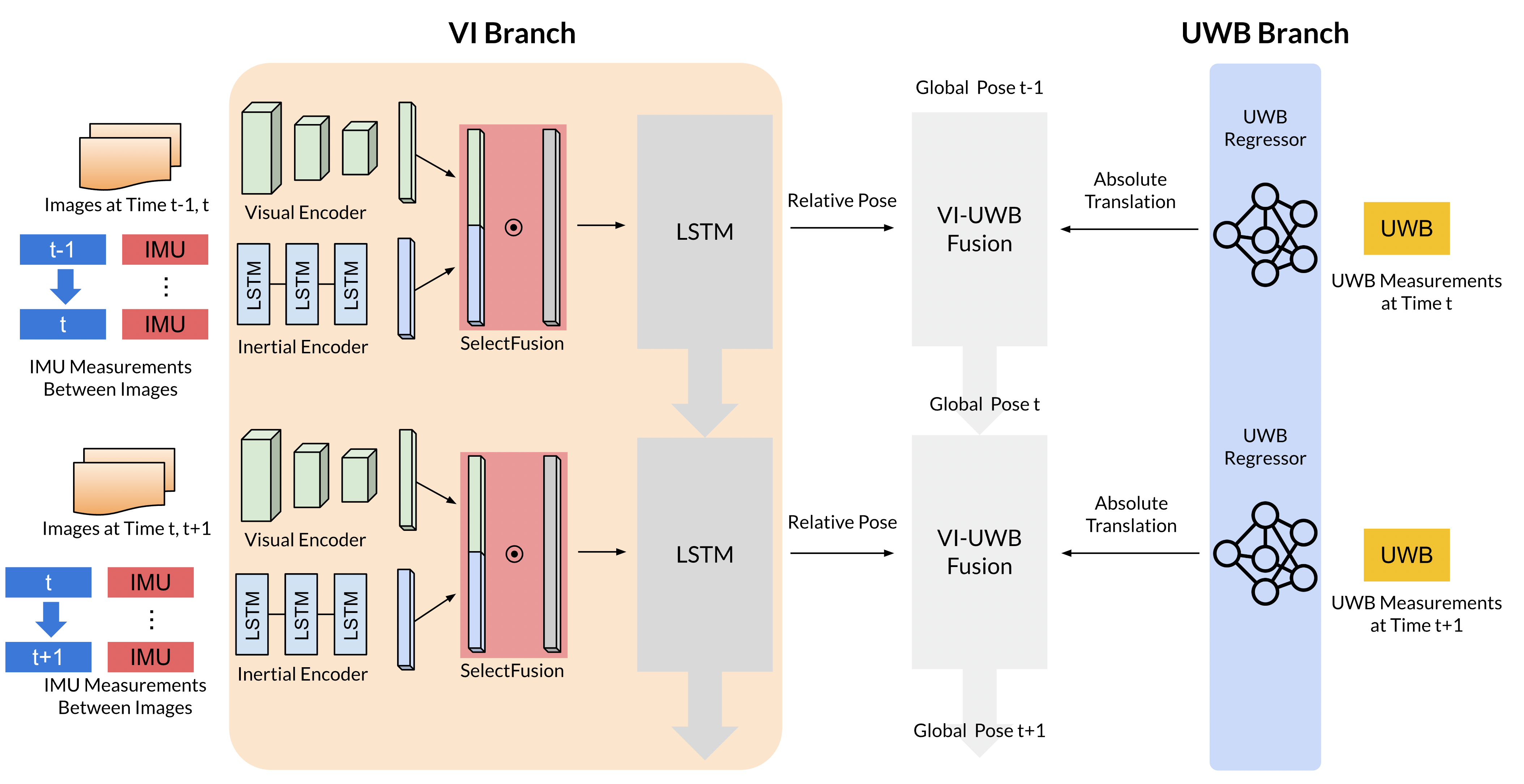

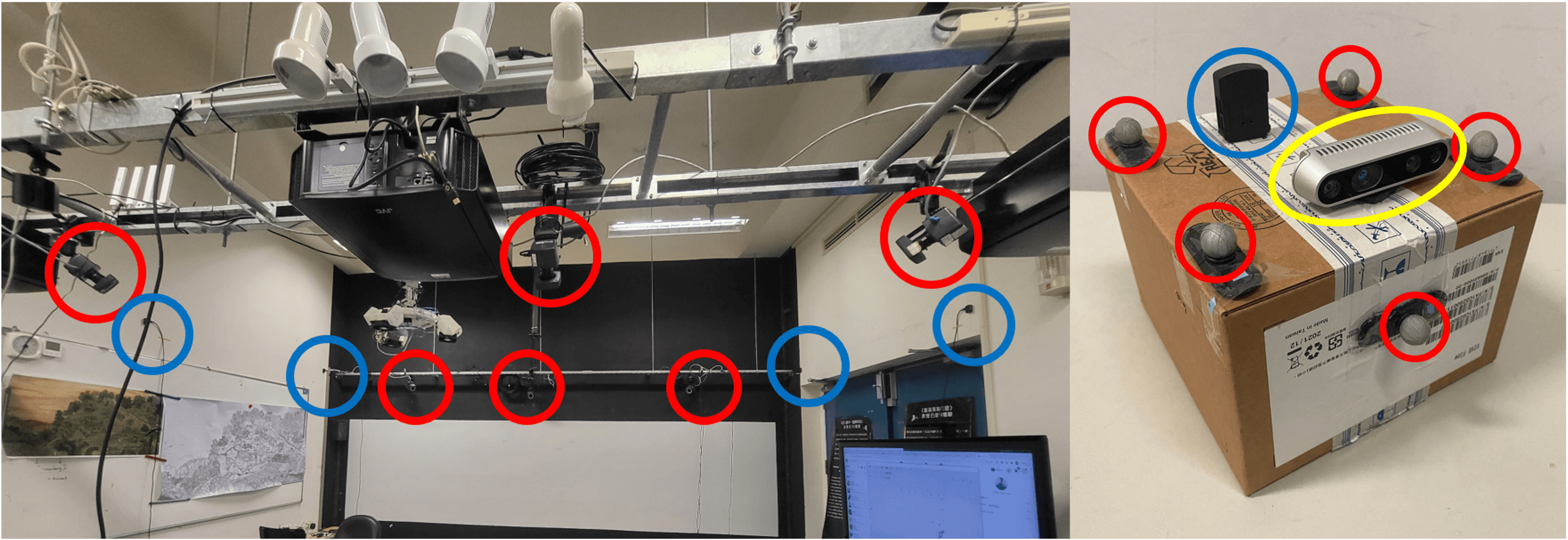

title={VIUNet: Deep Visual–Inertial–UWB Fusion for Indoor UAV Localization},

year={2023},

volume={},

number={},

pages={},

doi={10.1109/ACCESS.2023.3279292}}